Hi Sam! Sorry for the delay; I wanted to think through my answers. I will try to be brief since I need to head out shortly, but I wanted to jump in and respond a bit.

Regarding Nindami: it is a nice piece of software and looks very useful for someone getting started. My issue is that, at its heart, it runs semantic segmentation on individual images, and there are limits to how effective that can be. Note, for example, that the sample images show clean, bare dirt with each plant standing on its own. When you have things like cover crops growing underneath the plants, computer vision gets much harder. In that case you need additional cues such as temporal consistency (checking that what you detect agrees across successive frames), which gives you more information to narrow things down.

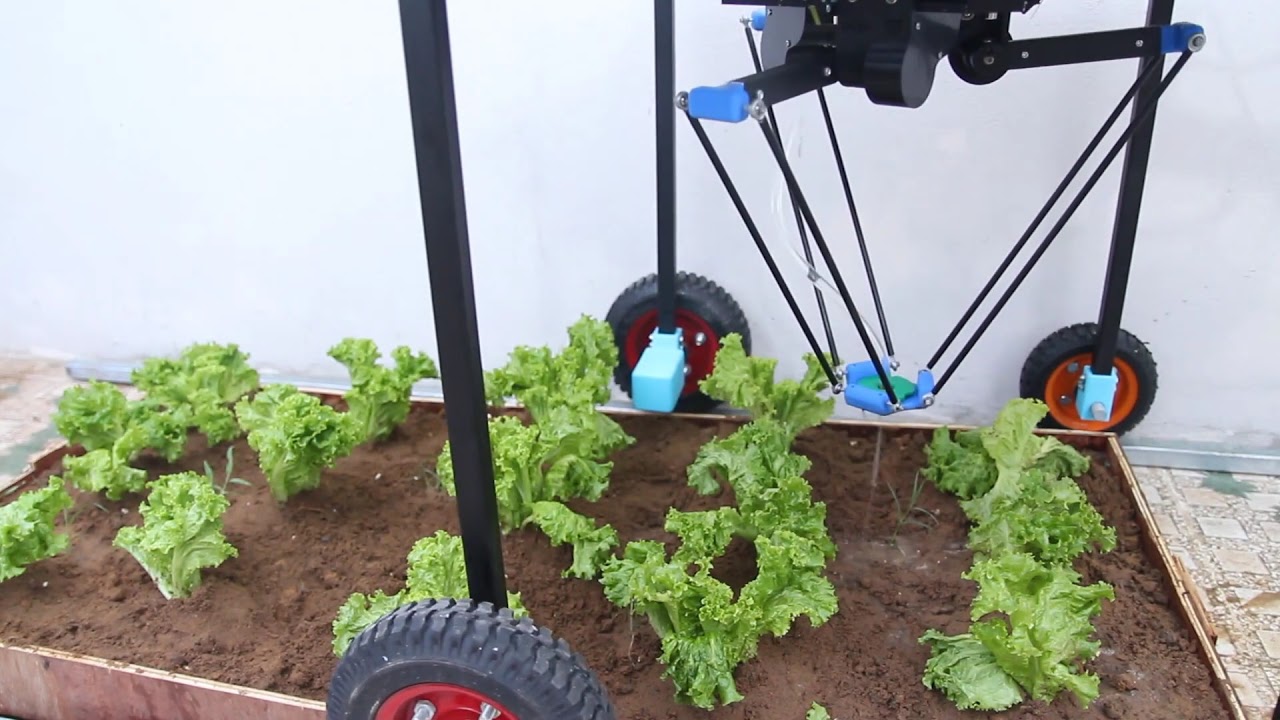
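
As one illustration of how temporal consistency adds information: if the same plant can be tracked across several frames, the per-frame classifier outputs can be combined instead of trusting any single image. The sketch below assumes tracking is already solved, treats frames as independent (real footage is not), and fuses per-frame probabilities by summing log-odds. `fuse_frame_probabilities` is a hypothetical name, not part of Nindami or any library.

```python
import math
from typing import Iterable


def fuse_frame_probabilities(probs: Iterable[float], prior: float = 0.5) -> float:
    """Combine per-frame P(weed) for one tracked plant into a single estimate.

    Assumes each frame's probability is an independent observation produced by
    a classifier with the given prior (a simplifying assumption).
    """
    prior_logit = math.log(prior / (1.0 - prior))
    total = prior_logit
    for p in probs:
        p = min(max(p, 1e-6), 1.0 - 1e-6)  # clamp to avoid log(0)
        # Each frame contributes its evidence relative to the shared prior.
        total += math.log(p / (1.0 - p)) - prior_logit
    return 1.0 / (1.0 + math.exp(-total))  # convert log-odds back to a probability


print(fuse_frame_probabilities([0.7, 0.7, 0.7]))
```

Three frames that each say 70% weed combine to roughly 93%, which is the narrowing-down effect in miniature. Because consecutive frames are correlated, this simple rule would be overconfident on real data, so a practical system would need something more careful.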

You can also look at the 3D structure of plants. A technique called structure from motion takes multiple images of a scene and reconstructs its 3D geometry, which would let us identify plants not just in a 2D image but in full 3D. The catch is that plants move, while classic structure from motion assumes a static scene, so we would need a network akin to those that perform structure from motion on moving people. Understanding the 3D structure of the scene would allow a robot arm to carefully pull a weed right next to a crop plant, something that would be very difficult to do using semantic segmentation alone.
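
To make the structure from motion idea a little more concrete: once camera poses are known, recovering a 3D point seen in two images reduces to triangulation. The sketch below uses the simple midpoint method (the point halfway between the closest points on the two viewing rays). It assumes camera centers and ray directions are already expressed in one metric frame; the function names are hypothetical, and real pipelines use more robust methods and must also estimate the poses themselves.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2.

    c1, c2 are camera centers; d1, d2 are viewing directions toward the same feature.
    """
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; point cannot be triangulated")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = _add(c1, _scale(d1, s))
    p2 = _add(c2, _scale(d2, t))
    return _scale(_add(p1, p2), 0.5)


# Two cameras 1 m apart, both seeing a point 2 m in front of their midpoint.
print(triangulate_midpoint((0, 0, 0), (0.5, 0, 2), (1, 0, 0), (-0.5, 0, 2)))
```

With noise-free rays the two closest points coincide and the result is the true point; with noisy detections the midpoint splits the difference, which is one reason accurate camera poses matter so much.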

So the question becomes: should we start with the basic system and expand it, or go straight to the advanced system? Probably we should do both. But I want to make sure I am building a system that is capable of the more advanced things, so that we build it once and then spend our time developing the algorithms. That is why I worry about things like ultra-precise GPS stamps, which will be important for training structure from motion algorithms. One of the most computationally intensive parts of structure from motion is resolving the relative camera poses, but if we build our GPS system right, it can eliminate a lot of that computation.

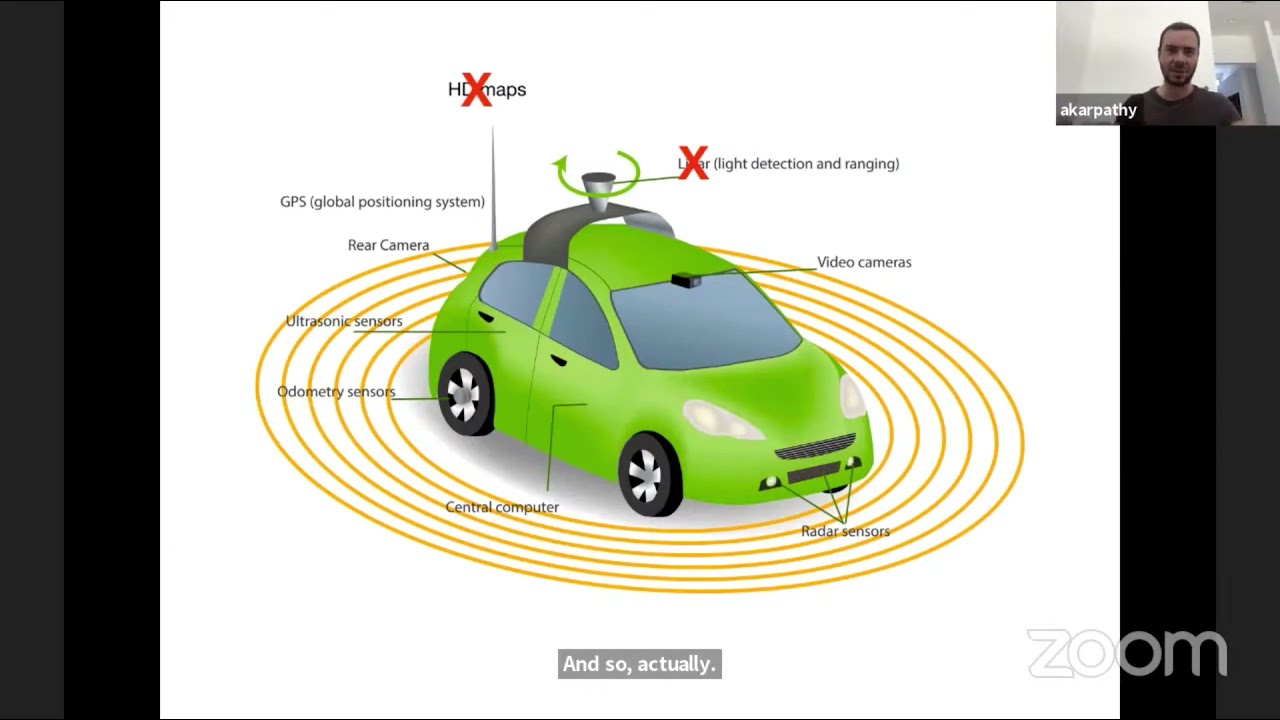
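
On the point about GPS stamps cutting down pose computation: if every frame carries an accurate position and heading, the relative pose between two frames can be computed directly instead of being searched for. A minimal planar sketch, assuming positions are already in a local metric frame (east and north in meters) and heading is measured counterclockwise from east; it ignores roll, pitch, and altitude, and `StampedPose` and `relative_pose` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class StampedPose:
    """Hypothetical per-frame record from the GPS/IMU system."""
    t: float      # capture time, seconds
    east: float   # meters in a local frame
    north: float  # meters in a local frame
    yaw: float    # radians, counterclockwise from the east axis


def wrap_angle(a: float) -> float:
    """Wrap an angle into [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def relative_pose(a: StampedPose, b: StampedPose) -> Tuple[float, float, float]:
    """Pose of frame b in frame a's body axes: (forward m, left m, yaw change rad)."""
    de, dn = b.east - a.east, b.north - a.north
    cos_y, sin_y = math.cos(a.yaw), math.sin(a.yaw)
    forward = cos_y * de + sin_y * dn   # world offset rotated into a's heading
    left = -sin_y * de + cos_y * dn
    return forward, left, wrap_angle(b.yaw - a.yaw)


# Robot facing north, second frame taken 0.5 m further north.
print(relative_pose(StampedPose(0.0, 0.0, 0.0, math.pi / 2),
                    StampedPose(0.1, 0.0, 0.5, math.pi / 2)))
```

With poses like these as an initial guess, a reconstruction pipeline could match features only between nearby frames and refine poses rather than estimate them from scratch; how much that saves in practice is something we would have to measure.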

The Tesla vision system I mentioned uses what they call a “hydra” architecture (a shared backbone network feeding multiple task-specific output heads). They have shared a lot of detail about how it works, and I think we need to adopt something that, while much simpler than theirs, has some of the same attributes. That is not going to come from grabbing an off-the-shelf semantic segmentation system.

I also want to point out that I am not yet ready to work directly on the vision system architecture, since we are still finalizing the basic hardware and building a robust camera system.

As for motor controllers: it won’t be hard for someone to integrate other motor controllers. But we already have a really nice ODrive integration and have done a lot of testing with it. Three of those controllers might be cheaper than two ODrives, but with three ODrives you can run two arms at once, which is more where I would like to go. That said, we can support both. One question I have is whether that controller is actually available for purchase. We are already going to go into production on our Acorn PCBs at some point, and we could expand to making our own motor controllers, but we want to limit how much work we take on at once. I know Oskar at ODrive personally and I really support all their work, even though I wish they would go fully open hardware. I have no issue continuing to use their controllers for now, though I share your desire to ultimately use open controllers.

You asked about GPS stamping in ROS. We could do it on the Raspberry Pi, whether we use ROS or just our Python stack, but that adds latency. I want to use a stereo or quad camera system, and the cameras need a trigger signal so their shutters fire at the same time. So if we have a microcontroller doing 100 Hz sensor fusion for accurate GPS positioning, that same microcontroller can also generate the camera trigger signal and produce extremely accurate GPS stamps. The more accurate the stamps are, the less compute is needed to train a structure from motion system.
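
The stamping step itself is simple once the trigger and the position estimate share one clock. A minimal sketch, assuming the microcontroller keeps a short buffer of fused fixes `(time, east, north)` at 100 Hz and records the time of each trigger edge on that same clock: the image's position is linearly interpolated between the two fixes that bracket the trigger. The function names and data layout are hypothetical, and the logic is shown in Python for readability.

```python
from bisect import bisect_right
from typing import List, Tuple

Fix = Tuple[float, float, float]  # (t seconds, east m, north m); hypothetical layout


def stamp_trigger(fixes: List[Fix], t_trigger: float) -> Tuple[float, float]:
    """Interpolate the fused position at the instant a camera trigger fired.

    `fixes` must have strictly increasing times from the same clock as the trigger.
    """
    if len(fixes) < 2:
        raise ValueError("need at least two fixes to interpolate")
    times = [f[0] for f in fixes]
    if not times[0] <= t_trigger <= times[-1]:
        raise ValueError("trigger time is outside the buffered fixes")
    # Index of the first fix after the trigger, clamped so both neighbors exist.
    i = min(max(bisect_right(times, t_trigger), 1), len(fixes) - 1)
    t0, e0, n0 = fixes[i - 1]
    t1, e1, n1 = fixes[i]
    alpha = (t_trigger - t0) / (t1 - t0)  # fraction of the way from fix i-1 to fix i
    return e0 + alpha * (e1 - e0), n0 + alpha * (n1 - n0)


def latency_error_m(speed_m_s: float, latency_s: float) -> float:
    """Position error from an unaccounted stamping delay while the robot moves."""
    return speed_m_s * latency_s


fixes = [(0.00, 0.000, 0.0), (0.01, 0.005, 0.0), (0.02, 0.010, 0.0)]
print(stamp_trigger(fixes, 0.004))
print(latency_error_m(0.5, 0.02))
```

The second function shows why the latency matters: at a hypothetical 0.5 m/s, an unaccounted 20 ms delay puts the image 1 cm away from where its stamp says it was, while stamping on the trigger edge removes that delay from the picture.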

It is important to me to be able to produce high-quality datasets that researchers can use to help solve the tough problems. For example, I want a neural network to help with 3D structure from motion of plants, and I have not been able to find any dataset for that. I personally am not capable of solving that problem from a machine learning perspective. But I CAN produce highly accurate GPS-stamped images and point the cameras at plants all day long. So we produce that dataset and work with university researchers to develop new techniques for structure from motion on plants. They get material for their research, and they help solve an extremely thorny problem for us. At least, that is my thinking.

Okay, I should run, but those are a few shotgun answers. I can come back later and take a look at some of the videos you shared. I have seen the IGUS delta before, but it’s something like $7k, and I know we can make a delta for less than that.